Amazon FSx for Lustre Update: Persistent Storage for Long-Term, High-Performance Workloads

Last year I wrote about Amazon FSx for Lustre and told you how our customers can use it to create pebibyte-scale, highly parallel POSIX-compliant file systems that serve thousands of simultaneous clients driving millions of IOPS (Input/Output Operations per Second) with sub-millisecond latency.

As a managed service, Amazon FSx for Lustre makes it easy for you to launch and run the world’s most popular high-performance file system. Our customers use this service for workloads where speed matters, including machine learning, high performance computing (HPC), and financial modeling.

Today we are enhancing Amazon FSx for Lustre by giving you the ability to create high-performance file systems that are durable and highly available, with a choice of three performance tiers. We are also launching a new, second-generation scratch file system that is designed to provide better support for spiky workloads.

Recent Updates

Before I dive into today’s news, let’s take a look at some of the most recent updates that we have made to the service:

Data Repository APIs – This update introduced a set of APIs that allow you to easily export files from FSx to S3, including the ability to initiate, monitor, and cancel the transfer of changed files to S3. To learn more, read New Enhancements for Moving Data Between Amazon FSx for Lustre and Amazon S3.

SageMaker Integration – This update gave you the ability to use data stored on an Amazon FSx for Lustre file system as training data for an Amazon SageMaker model. You can train your models using vast amounts of data without first moving it to S3.

ParallelCluster Integration – This update let you create an Amazon FSx for Lustre file system when you use AWS ParallelCluster to create an HPC cluster, with the option to use an existing file system as well.

EKS Integration – This update let you use the new AWS FSx Container Storage Interface (CSI) driver to access Amazon FSx for Lustre file systems from your Amazon EKS clusters.

Smaller File System Sizes – This update let you create 1.2 TiB and 2.4 TiB Lustre file systems, in addition to the original 3.6 TiB.

CloudFormation Support – This update let you use AWS CloudFormation templates to deploy stacks that use Amazon FSx for Lustre file systems. To learn more, check out AWS::FSx::FileSystem LustreConfiguration.

SOC Compliance – This update announced that Amazon FSx for Lustre can now be used with applications that are subject to Service Organization Control (SOC) compliance. To learn more about this and other compliance programs, take a look at AWS Services in Scope by Compliance Program.

Amazon Linux Support – This update allowed EC2 instances running Amazon Linux or Amazon Linux 2 to access Amazon FSx for Lustre file systems.

Client Repository – You can now make use of Lustre clients that are compatible with recent versions of Ubuntu, Red Hat Enterprise Linux, and CentOS. To learn more, read Installing the Lustre Client.

New Persistent & Scratch Deployment Options

We originally launched the service to target high-speed, short-term processing of data, so until today FSx for Lustre offered only scratch file systems. These are ideal for temporary storage and shorter-term processing: data is not replicated and does not persist if a file server fails. We’re now expanding beyond short-term processing by launching persistent file systems, which are designed for longer-term storage and workloads: data is replicated, and file servers are replaced if they fail.

In addition to this new deployment option, we are also launching a second-generation scratch file system that is designed to provide better support for spiky workloads, with the ability to provide burst throughput up to 6x higher than the baseline. Like the first-generation scratch file system, this one is great for temporary storage and short-term data processing.
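To put the burst figure in concrete terms, here is some back-of-the-envelope arithmetic using the per-TiB SCRATCH_2 figures from the comparison table that follows (200 MB/s baseline, up to 1,200 MB/s burst). It is a sketch rather than a performance guarantee: delivered throughput depends on the workload, and `scratch2_throughput` is a hypothetical helper, not part of any AWS SDK.

```python
from typing import Tuple

# Per-TiB SCRATCH_2 figures from the deployment-option comparison in this post.
BASELINE_MBPS_PER_TIB = 200
BURST_MBPS_PER_TIB = 1_200


def scratch2_throughput(storage_tib: float) -> Tuple[float, float]:
    """Return (baseline, burst) aggregate throughput in MB/s for a SCRATCH_2 file system."""
    # Aggregate throughput scales linearly with the amount of provisioned storage.
    return storage_tib * BASELINE_MBPS_PER_TIB, storage_tib * BURST_MBPS_PER_TIB


baseline, burst = scratch2_throughput(2.4)
print(f"2.4 TiB SCRATCH_2: {baseline:.0f} MB/s baseline, up to {burst:.0f} MB/s burst "
      f"({burst / baseline:.0f}x)")
```

For a 2.4 TiB file system this works out to 480 MB/s baseline and up to 2,880 MB/s burst, which is the 6x ratio described above.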

Here is a table that will help you to choose between the deployment options:

| | Persistent | Scratch 2 | Scratch 1 |
|---|---|---|---|
| API Name | PERSISTENT_1 | SCRATCH_2 | SCRATCH_1 |
| Storage Replication | Same AZ | None | None |
| Aggregated Throughput (per TiB of provisioned capacity) | 50 MB/s, 100 MB/s, or 200 MB/s | 200 MB/s, burst to 1,200 MB/s | 200 MB/s |
| IOPS | Millions | Millions | Millions |
| Latency | Sub-millisecond, higher variance | Sub-millisecond, very low variance | Sub-millisecond, very low variance |
| Expected Workload Lifetime | Days, weeks, months | Hours, days, weeks | Hours, days, weeks |
| Encryption at Rest | Customer-managed or FSx-managed keys | FSx-managed keys | FSx-managed keys |
| Encryption in Transit | Yes, when accessed from supported EC2 instances in supported regions | Yes, when accessed from supported EC2 instances in supported regions | No |
| Initial Storage Allocation | 1.2 TiB, 2.4 TiB, and increments of 2.4 TiB | 1.2 TiB, 2.4 TiB, and increments of 2.4 TiB | 1.2 TiB, 2.4 TiB, 3.6 TiB |
| Additional Storage Allocation | 2.4 TiB | 2.4 TiB | 3.6 TiB |
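The storage-allocation rows translate into a simple validity check. This is a minimal sketch, assuming the CreateFileSystem API's convention of expressing `StorageCapacity` in GiB, where 1,200 and 2,400 GiB correspond to the 1.2 TiB and 2.4 TiB sizes in the table; `is_valid_capacity` and `INCREMENT_GIB` are hypothetical names, not part of any AWS SDK.

```python
# Storage increments (GiB) per deployment type, following the table above (assumption:
# the API expresses StorageCapacity in GiB). 1,200 and 2,400 GiB are always allowed;
# beyond that, capacity must be a multiple of the deployment type's increment.
INCREMENT_GIB = {
    "PERSISTENT_1": 2400,
    "SCRATCH_2": 2400,
    "SCRATCH_1": 3600,
}


def is_valid_capacity(deployment_type: str, capacity_gib: int) -> bool:
    """Return True if capacity_gib is an allowed size for the given deployment type."""
    if deployment_type not in INCREMENT_GIB:
        raise ValueError(f"unknown deployment type: {deployment_type}")
    if capacity_gib in (1200, 2400):
        return True
    step = INCREMENT_GIB[deployment_type]
    return capacity_gib > 0 and capacity_gib % step == 0


for dtype, size in [("PERSISTENT_1", 4800), ("PERSISTENT_1", 3600), ("SCRATCH_1", 3600)]:
    print(dtype, size, is_valid_capacity(dtype, size))
```

The same 3.6 TiB request is therefore fine for a Scratch 1 file system but would need to be rounded to 2.4 TiB or 4.8 TiB for a Persistent or Scratch 2 file system.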

Creating a Persistent File System

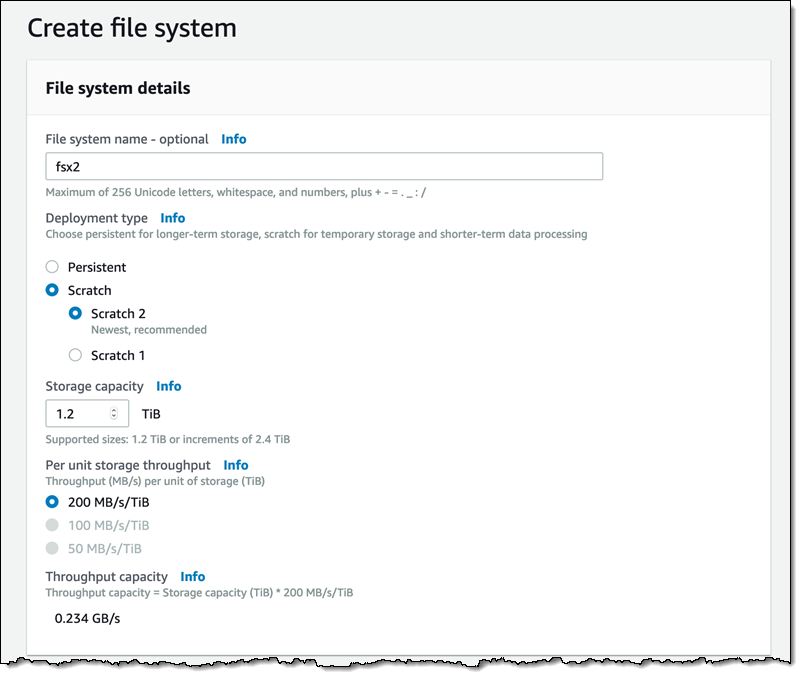

I can create a file system that uses the persistent deployment option using the AWS Management Console, AWS Command Line Interface (CLI) (create-file-system), a CloudFormation template, or the FSx for Lustre APIs (CreateFileSystem). I’ll use the console:
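The console choices map onto the CreateFileSystem API. As a hedged sketch, the Python below only builds the keyword arguments that boto3's `fsx.create_file_system()` accepts for a PERSISTENT_1 file system; it does not contact AWS. The subnet ID is a hypothetical placeholder, `build_persistent_request` is a hypothetical helper, and the parameter names (`FileSystemType`, `StorageCapacity` in GiB, `SubnetIds`, `LustreConfiguration.DeploymentType`, `PerUnitStorageThroughput`) reflect my reading of the API reference, so confirm them there before relying on this.

```python
import json

# PERSISTENT_1 throughput tiers, in MB/s per TiB of provisioned storage.
PERSISTENT_THROUGHPUT_TIERS = (50, 100, 200)


def build_persistent_request(subnet_id: str, capacity_gib: int, throughput_per_tib: int) -> dict:
    """Build keyword arguments for boto3's fsx.create_file_system() (PERSISTENT_1)."""
    if throughput_per_tib not in PERSISTENT_THROUGHPUT_TIERS:
        raise ValueError(f"throughput must be one of {PERSISTENT_THROUGHPUT_TIERS} MB/s per TiB")
    return {
        "FileSystemType": "LUSTRE",
        "StorageCapacity": capacity_gib,  # in GiB, e.g. 1200 or a multiple of 2400
        "SubnetIds": [subnet_id],  # storage is replicated within a single AZ
        "LustreConfiguration": {
            "DeploymentType": "PERSISTENT_1",
            "PerUnitStorageThroughput": throughput_per_tib,
        },
    }


# Hypothetical subnet ID; substitute one from your own VPC.
params = build_persistent_request("subnet-0123456789abcdef0", 2400, 200)
print(json.dumps(params, indent=2))
# With boto3 installed and credentials configured, the request could be sent with:
#   boto3.client("fsx").create_file_system(**params)
```

Keeping request construction separate from the SDK call makes it easy to review or unit-test the parameters before anything is provisioned.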

Then I mount it like any other file system, and access it as usual.
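Mounting uses the Lustre client's `<dns-name>@tcp:/<mount-name>` source syntax. The sketch below only assembles the command line; the DNS name and mount name are hypothetical (real values come from the `DNSName` and `LustreConfiguration.MountName` fields returned by DescribeFileSystems), `lustre_mount_command` is a hypothetical helper, and the default mount options are an assumption, so check the FSx for Lustre documentation for the currently recommended options.

```python
import shlex
from typing import List

# Default mount options are an assumption; check the FSx for Lustre docs for current guidance.
DEFAULT_OPTIONS = "relatime,flock"


def lustre_mount_command(dns_name: str, mount_name: str, mount_point: str = "/fsx",
                         options: str = DEFAULT_OPTIONS) -> List[str]:
    """Build the argv for mounting an FSx for Lustre file system with the Lustre client."""
    # The Lustre client addresses the file system as <dns-name>@tcp:/<mount-name>.
    return ["sudo", "mount", "-t", "lustre", "-o", options,
            f"{dns_name}@tcp:/{mount_name}", mount_point]


# Hypothetical values; real ones come from DescribeFileSystems.
cmd = lustre_mount_command("fs-0123456789abcdef0.fsx.us-east-1.amazonaws.com", "abcdefgh")
print(shlex.join(cmd))
# To actually mount (requires the Lustre client and root): subprocess.run(cmd, check=True)
```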

Things to Know

Here are a couple of things to keep in mind:

Lustre Client – You will need to use an AMI (Amazon Machine Image) that includes the Lustre client. You can use the latest Amazon Linux AMI, or you can create your own.

S3 Export – Both persistent and scratch file systems let you export changes to S3 using the CreateDataRepositoryTask API action. This helps you meet stringent Recovery Point Objectives (RPOs) while taking advantage of the fact that S3 is designed to deliver 99.999999999% (eleven 9s) of durability.
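Here is a hedged sketch of the request behind such an export, again built as keyword arguments for boto3 (`fsx.create_data_repository_task()`) without contacting AWS. It assumes the file system was created with a linked S3 bucket, and it follows my reading of the API reference: `Type` is `EXPORT_TO_REPOSITORY`, a `Report` setting is required (disabled here), and `Paths` are relative to the mount point, with the whole file system exported when they are omitted. `build_export_task` and the example IDs are hypothetical.

```python
from typing import List, Optional


def build_export_task(file_system_id: str, paths: Optional[List[str]] = None) -> dict:
    """Build keyword arguments for boto3's fsx.create_data_repository_task() (export to S3)."""
    request = {
        "FileSystemId": file_system_id,
        "Type": "EXPORT_TO_REPOSITORY",
        # A completion report can be generated; it is disabled here to keep the sketch short.
        "Report": {"Enabled": False},
    }
    if paths:
        # Paths are relative to the mount point (e.g. "results", not "/fsx/results").
        if any(p.startswith("/") for p in paths):
            raise ValueError("export paths must be relative to the mount point")
        request["Paths"] = list(paths)
    return request


# Hypothetical file system ID and path.
task = build_export_task("fs-0123456789abcdef0", ["results/run-42"])
print(task)
# With boto3 and credentials configured:
#   boto3.client("fsx").create_data_repository_task(**task)
```

The resulting task can then be monitored with `describe_data_repository_tasks()` or stopped with `cancel_data_repository_task()`, matching the initiate, monitor, and cancel workflow of the Data Repository APIs.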

Available Now

Persistent file systems are available in all AWS regions where Amazon FSx for Lustre is available. Scratch 2 file systems are available in all commercial AWS regions where Amazon FSx for Lustre is available, with the exception of Europe (Stockholm).

Pricing is based on the performance tier that you choose and the amount of storage that you provision; see the Amazon FSx for Lustre Pricing page for more info.

— Jeff;

Source: AWS News