The AWS DeepRacer League Virtual Circuit is Now Open – Train Your Model Today!

AWS DeepRacer is a 1/18th scale four-wheel drive car with a considerable amount of onboard hardware and software. Starting at re:Invent 2018 and continuing at the AWS Global Summits, you have the opportunity to get hands-on experience with a DeepRacer. At these events, you can train a model using reinforcement learning and then race it around a track. The fastest racers and their lap times for each summit are shown on our leaderboards.

New DeepRacer League Virtual Circuit

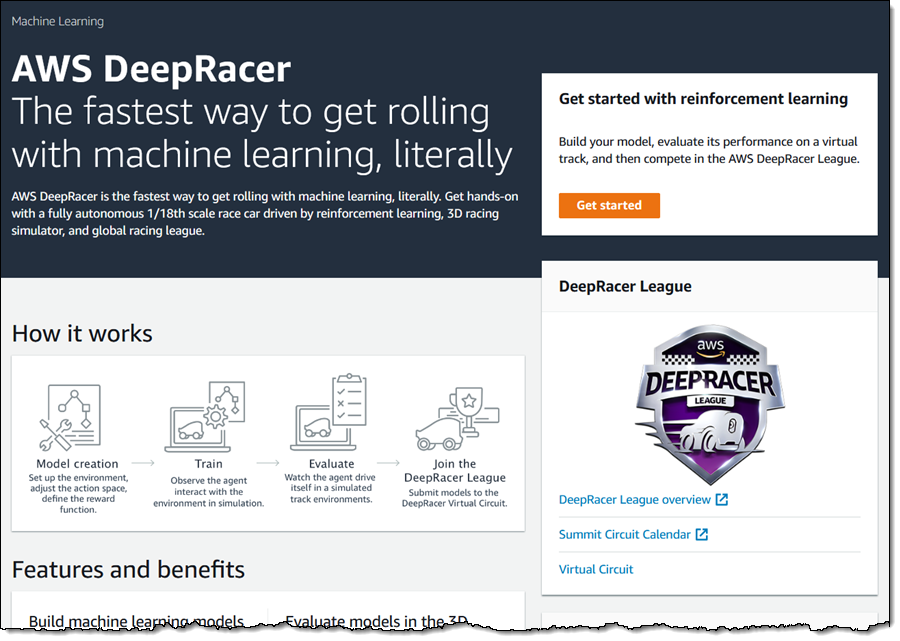

Today we are launching the AWS DeepRacer League Virtual Circuit. You can build, train, and evaluate your reinforcement learning models online and compete for some amazing prizes, all from the comfort of the DeepRacer Console!

We’ll add a new track each month, taking inspiration from famous race tracks around the globe, so that you can refine your models and broaden your skill set. Each month, the top entrant on the leaderboard will win an expenses-paid package to AWS re:Invent 2019, where they will take part in the League Knockout Rounds, with a chance to win the Championship Cup!

New DeepRacer Console

We are making the DeepRacer Console available today in the US East (N. Virginia) Region. You can use it to build and train your DeepRacer models and to compete in the Virtual Circuit, while gaining practical, hands-on experience with reinforcement learning. Following the steps in the DeepRacer Lab that is used at the hands-on DeepRacer workshops, I open the console and click Get started:

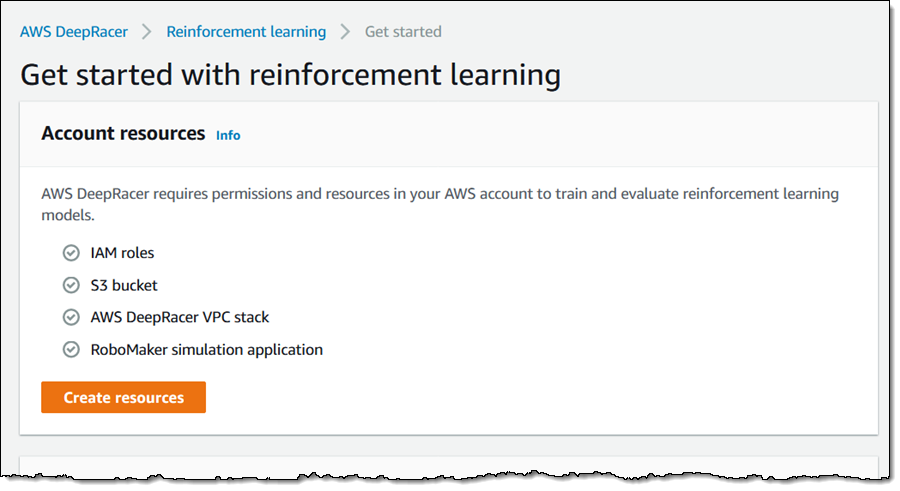

The console provides me with an overview of the model training process, and then asks me to create the AWS resources needed to train and evaluate my models. I review the info and click Create resources to proceed:

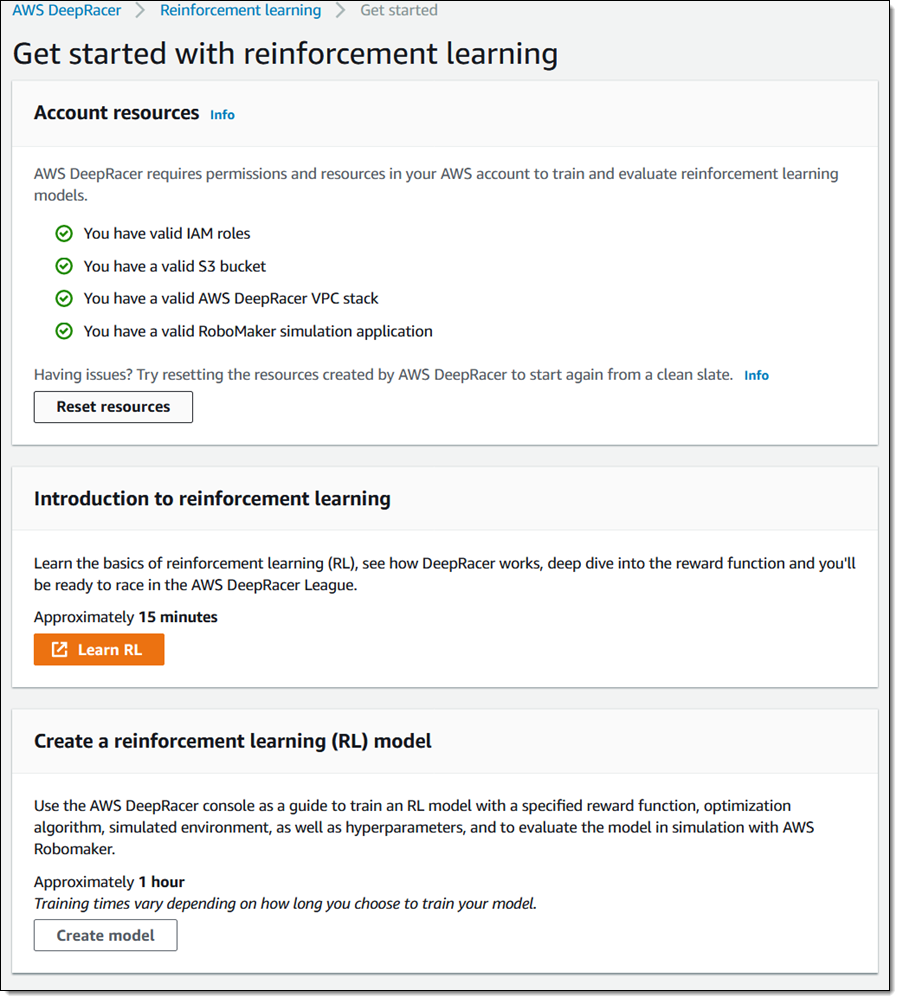

The resources are created in minutes (I can click Learn RL to learn more about reinforcement learning while this is happening). I click Create model to move ahead:

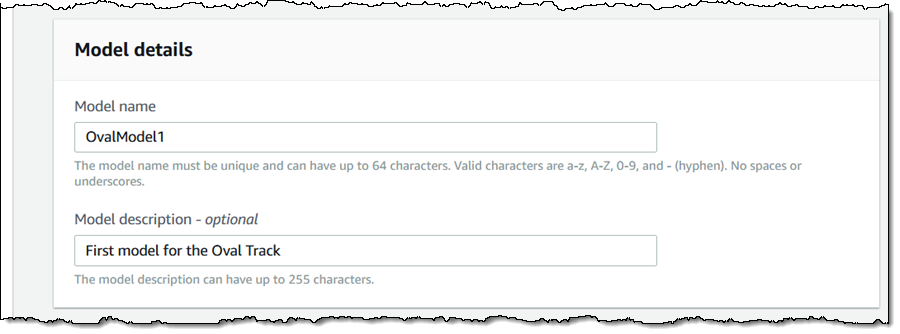

I enter a name and a description for my model:

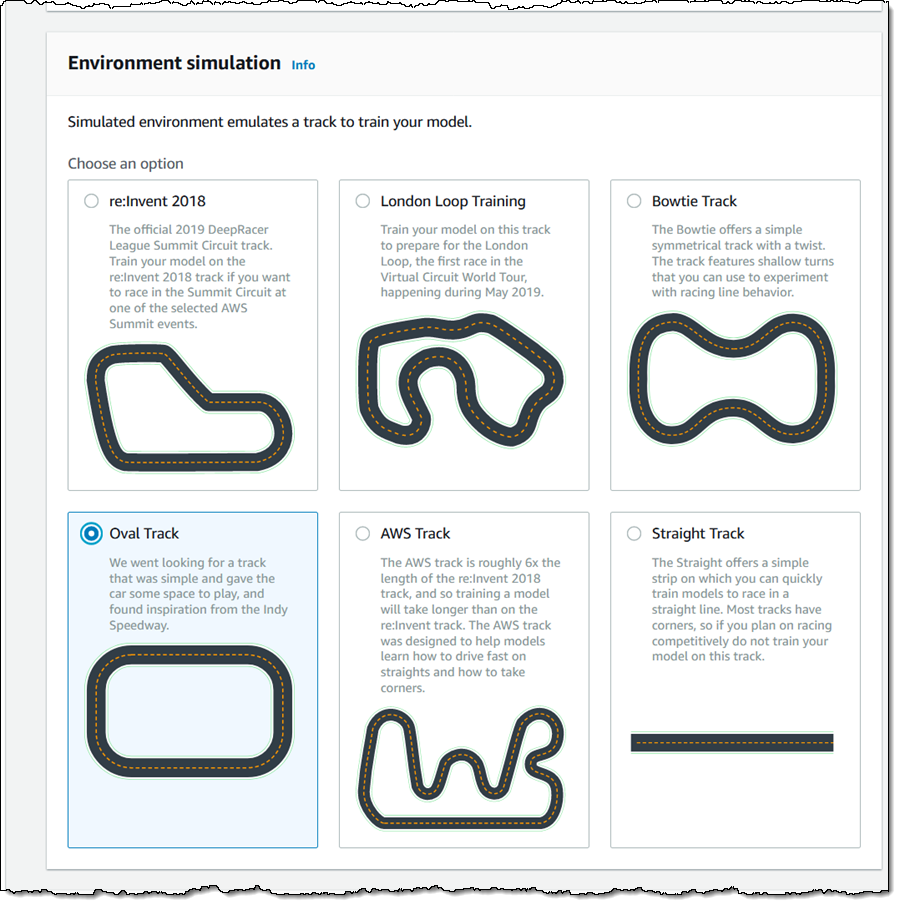

Then I pick a track (more tracks will appear throughout the duration of the Virtual League):

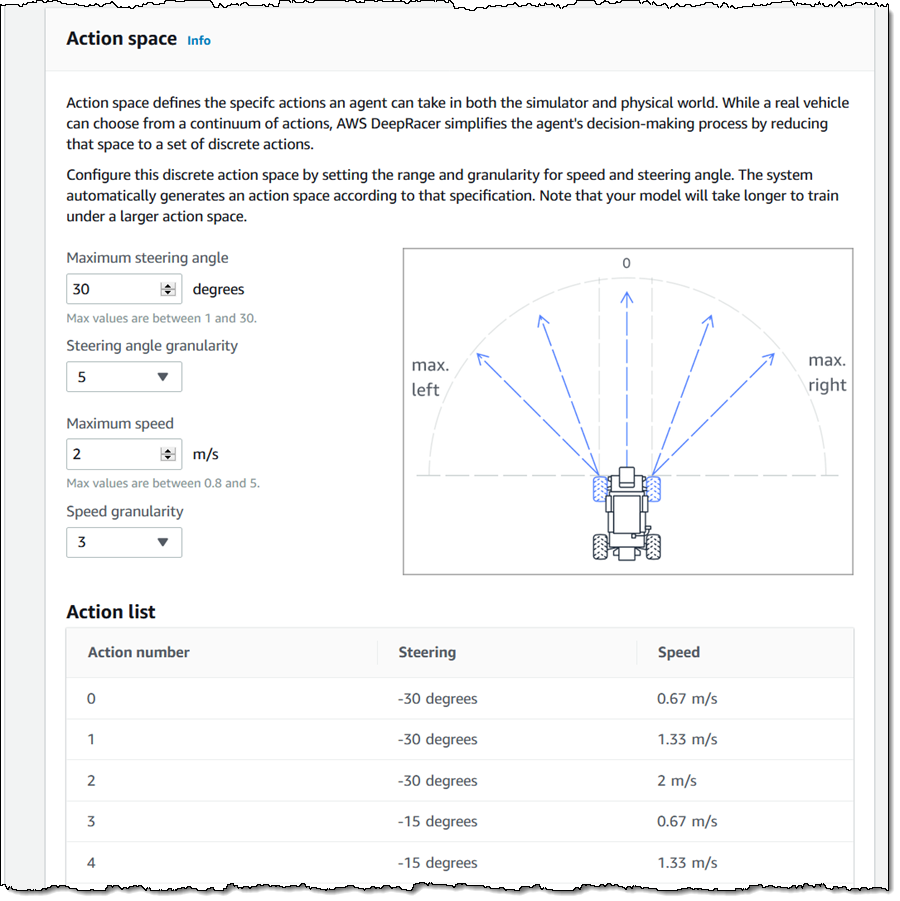

Now I define the Action space for my model. This is a set of discrete actions that my model can perform. Choosing values that increase the number of options will generally enhance the quality of my model, at the cost of additional training time:
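To make the trade-off concrete, here is a minimal sketch that enumerates a discrete action space. It assumes the space is a grid built from a maximum steering angle, a steering granularity, a maximum speed, and a speed granularity; the helper name and its parameters are hypothetical, not the console's internals. The point it shows is that the number of actions is the product of the two granularities, so each added option multiplies the choices the model must learn to distinguish.

```python
from itertools import product


def build_action_space(max_steering_deg, steering_granularity, max_speed, speed_granularity):
    """Return every (steering_angle, speed) combination as a list of dicts.

    Hypothetical helper: assumes steering angles are spread evenly from
    -max_steering_deg to +max_steering_deg, and speeds evenly up to max_speed
    (speed units assumed to be m/s).
    """
    if steering_granularity < 2 or speed_granularity < 1:
        raise ValueError("need at least 2 steering angles and 1 speed")
    step = 2 * max_steering_deg / (steering_granularity - 1)
    angles = [round(-max_steering_deg + i * step, 2) for i in range(steering_granularity)]
    speeds = [round(max_speed * (j + 1) / speed_granularity, 2) for j in range(speed_granularity)]
    # Each steering angle paired with each speed becomes one discrete action.
    return [{"steering_angle": a, "speed": s} for a, s in product(angles, speeds)]


coarse = build_action_space(30, 3, 1.0, 2)
fine = build_action_space(30, 5, 1.0, 3)
print(len(coarse), len(fine))  # prints "6 15"
```

Going from 3 x 2 to 5 x 3 options more than doubles the action count, which is why a richer action space tends to need more training time.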

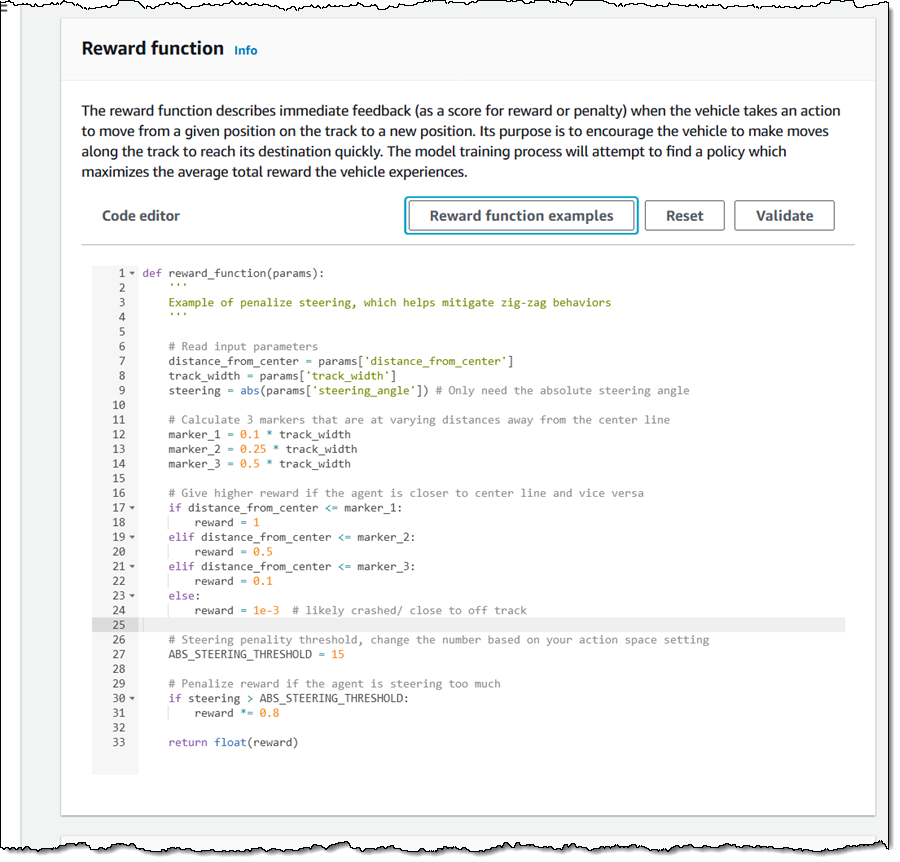

Next, I define the reward function for my model. This function evaluates the current state of the vehicle throughout the training process and returns a reward value that indicates how well the model is performing (higher rewards signify better performance). I can use one of three predefined reward functions (available by clicking Reward function examples) as-is, customize them, or build one from scratch. I’ll use Prevent zig-zag, a sample reward function that penalizes zig-zag behavior, to get started:

The reward function is written in Python 3, and has access to parameters (track_width, distance_from_center, all_wheels_on_track, and many more) that describe the position and state of the car, and also provide information about the track.
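For illustration, here is a sketch of a reward function in the spirit of Prevent zig-zag; it is not the console's exact sample. It assumes the console calls a function named `reward_function` with a dict of parameters. `track_width`, `distance_from_center`, and `all_wheels_on_track` are the parameters named above; `steering_angle` (in degrees) is an assumption, as are the band widths and the 15-degree steering threshold.

```python
def reward_function(params):
    """Reward staying near the center line; penalize sharp steering.

    Assumes params contains 'all_wheels_on_track', 'distance_from_center',
    'track_width' and (assumed) 'steering_angle' in degrees.
    """
    if not params["all_wheels_on_track"]:
        return 1e-3  # near-zero reward once the car leaves the track

    distance_from_center = params["distance_from_center"]
    track_width = params["track_width"]
    abs_steering = abs(params["steering_angle"])

    # Three bands around the center line: the closer, the higher the reward.
    if distance_from_center <= 0.1 * track_width:
        reward = 1.0
    elif distance_from_center <= 0.25 * track_width:
        reward = 0.5
    elif distance_from_center <= 0.5 * track_width:
        reward = 0.1
    else:
        reward = 1e-3

    # Penalize large steering angles to discourage zig-zagging.
    # Assumed threshold; tune it to match your action space.
    steering_threshold = 15.0
    if abs_steering > steering_threshold:
        reward *= 0.8

    return float(reward)


sample = {"all_wheels_on_track": True, "distance_from_center": 0.05,
          "track_width": 0.6, "steering_angle": -20.0}
print(reward_function(sample))  # prints 0.8: centered, but steering hard
```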

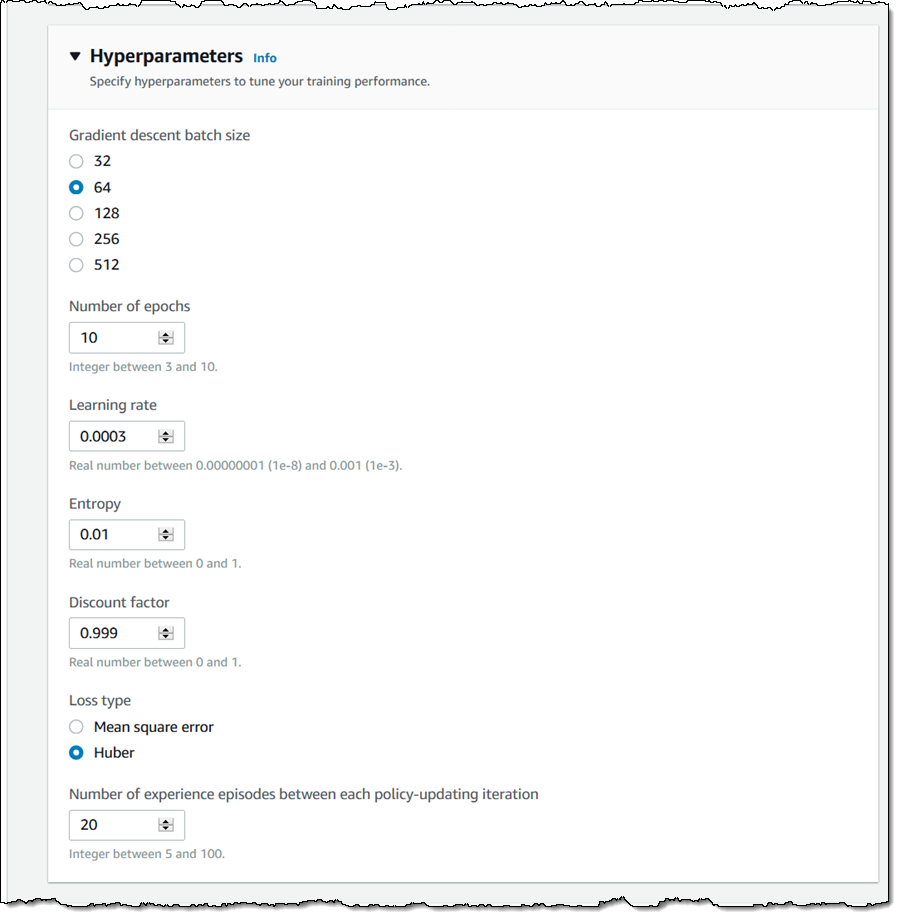

I also control a set of hyperparameters that affect the overall training performance. Since I don’t understand any of these (just being honest here), I will accept all of the defaults:

To learn more about hyperparameters, read Systematically Tune Hyperparameters.

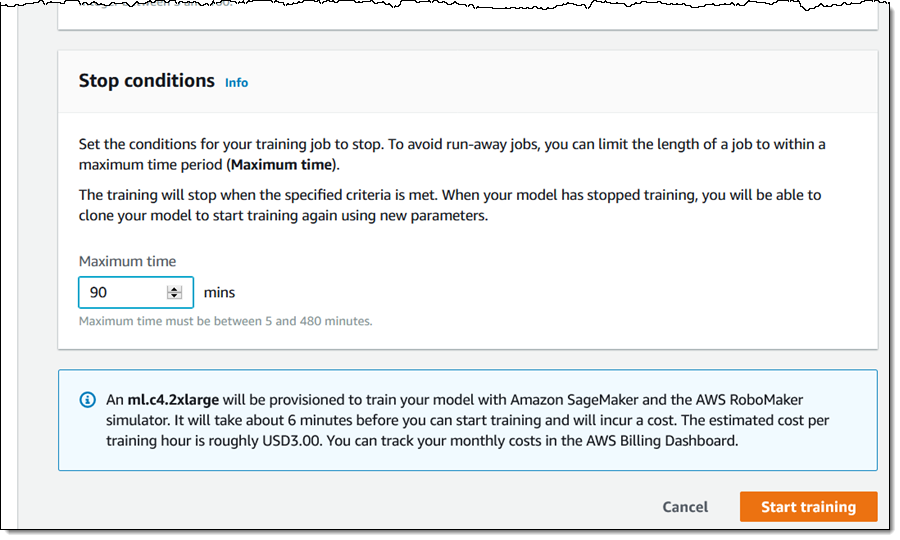

Finally, I specify a time limit for my training job, and click Start training. In general, simple models will converge in 90 to 120 minutes, but this is highly dependent on the maximum speed and the reward function.

The training job is initialized (this takes about 6 minutes), and I can track progress in the console:

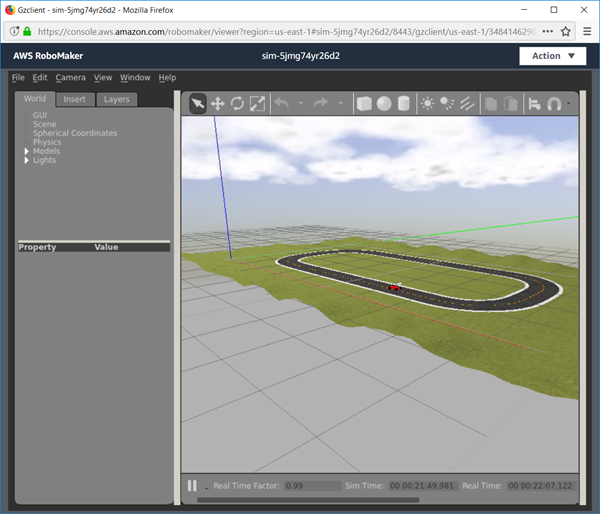

The training job makes use of AWS RoboMaker so I can also monitor it from the RoboMaker Console. For example, I can open the Gazebo window, see my car, and watch the training process in real time:

One note of caution: changing the training environment (by directly manipulating Gazebo) will adversely affect the training run, most likely rendering it useless.

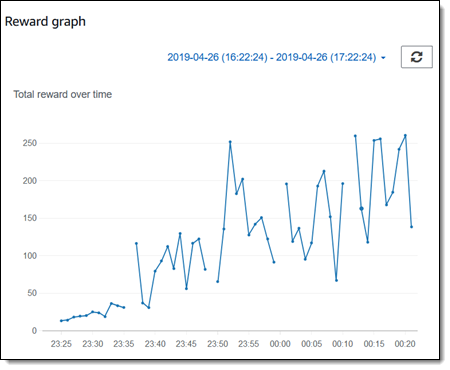

As the training progresses, the Reward graph will go up and to the right (as we often say at Amazon) if the car is learning how to stay on the track:

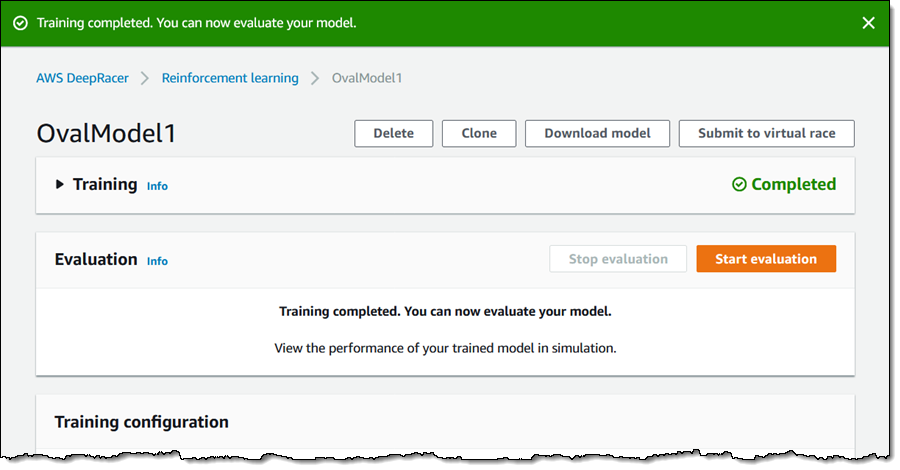
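If you want to put a number on "up and to the right", a small sketch like the one below smooths a reward curve and compares its start and end. It assumes you already have a list of per-episode reward totals (how you export them is outside this sketch); the helper names, the window of 5 episodes, and the 1% tolerance are all hypothetical choices.

```python
def moving_average(values, window):
    """Trailing moving average: one value per full window of inputs."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [sum(values[i - window:i]) / window for i in range(window, len(values) + 1)]


def reward_trend(episode_rewards, window=5, tolerance=0.01):
    """Classify a reward curve as 'improving', 'flat', or 'declining'.

    Compares the first and last smoothed values, relative to the first one.
    Smoothing keeps an occasional regression from dominating the verdict.
    """
    smoothed = moving_average(episode_rewards, window)
    start, end = smoothed[0], smoothed[-1]
    change = (end - start) / max(abs(start), 1e-9)
    if change > tolerance:
        return "improving"
    if change < -tolerance:
        return "declining"
    return "flat"


print(reward_trend([1, 2, 2, 3, 5, 4, 6, 7, 8, 9]))  # prints "improving"
```

Comparing only the smoothed endpoints is deliberately crude; a dip in the middle of training does not change the verdict as long as the curve ends higher than it started.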

If the graph flattens out or declines precipitously and stays there, either your reward function is not rewarding the desired behavior or some other setting is getting in the way. However, patience is a virtue, and there will be the occasional regression on the way to the top.

After the training is complete, there’s a short pause while the new model is finalized and stored, and then it is time for me to evaluate my model by running it in a simulation. I click Start evaluation to do this:

I can evaluate the model on any of the available tracks. Using one track for training and a different one for evaluation is a good way to make sure that the model generalizes and has not been overfit to a single track. However, using the same track for training and evaluation is a good way to get started, and that’s what I will do. I select the Oval Track and 3 trials, and click Start evaluation:

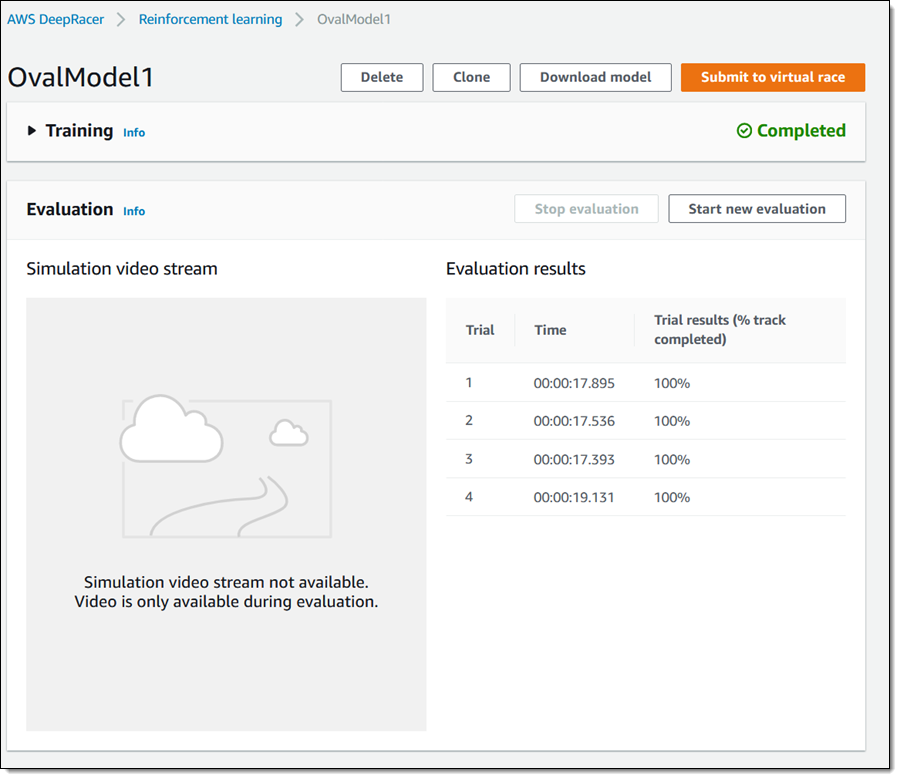

The RoboMaker simulator launches; note that the evaluation carries an hourly cost. The results (lap times) are displayed when the simulation is complete:

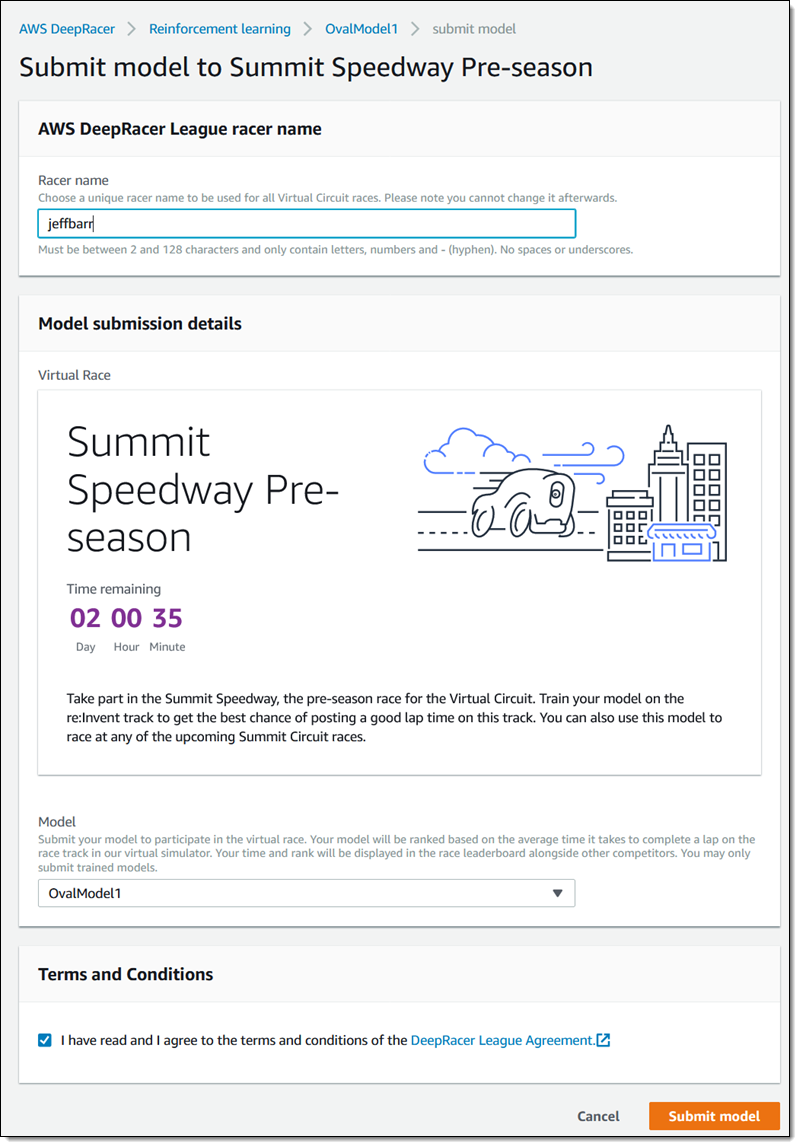
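If you keep track of trial results yourself while iterating on a model, a helper like this one can summarize them. The `(completion_percent, lap_time_seconds)` tuple format is hypothetical, and counting only fully completed laps toward the best and mean times is an assumption made for this sketch, not the league's documented ranking rule.

```python
from statistics import mean


def summarize_trials(trials):
    """Summarize evaluation trials given as (completion_percent, lap_time_seconds).

    Only trials that reached 100% completion count toward best and mean lap time.
    """
    finished = [lap for pct, lap in trials if pct >= 100.0]
    return {
        "trials": len(trials),
        "finished": len(finished),
        "best_lap": min(finished) if finished else None,
        "mean_lap": mean(finished) if finished else None,
    }


# Three hypothetical trials: two full laps and one that left the track at 63%.
print(summarize_trials([(100.0, 12.4), (100.0, 11.9), (63.0, 8.1)]))
```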

At this point I can evaluate my model on another track, step back and refine my model and evaluate it again, or submit my model to the current month’s Virtual Circuit track to take part in the DeepRacer League. To do that, I click Submit to virtual race, enter my racer name, choose a model, agree to the terms and conditions, and click Submit model:

After I submit, my model will be evaluated on the pre-season track and my lap time will be used to place me in the Virtual Circuit Leaderboard.

Things to Know

Here are a couple of things to know about the AWS DeepRacer and the AWS DeepRacer League:

AWS Resources – Amazon SageMaker is used to train models, which are then stored in Amazon Simple Storage Service (S3). AWS RoboMaker provides the virtual track environment, which is used for training and evaluation. An AWS CloudFormation stack is used to create an Amazon Virtual Private Cloud, complete with subnets, routing tables, an Elastic IP Address, and a NAT Gateway.

Costs – You can use the DeepRacer console at no charge. As soon as you start training your first model, you will get service credits for SageMaker and RoboMaker to give you 10 hours of free training on these services. The credits are applied at the end of the month and are available for 30 days, as part of the AWS Free Tier. The DeepRacer architecture uses a NAT Gateway that carries an availability charge. Your account will automatically receive service credits to offset this charge, showing net zero on your account.

DeepRacer Cars – You can preorder your DeepRacer car now! Deliveries to addresses in the United States will begin in July 2019.
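As a rough, back-of-envelope check on the Costs note, the 10 hours of free training can be compared with the 90 to 120 minutes that simple models typically need to converge. This sketch assumes the credits are consumed only by training time, minute for minute, and ignores the roughly 6-minute initialization, evaluation runs, and anything else that might draw on them.

```python
FREE_TRAINING_HOURS = 10  # from the Costs note


def full_runs_covered(minutes_per_run, free_hours=FREE_TRAINING_HOURS):
    """Number of complete training runs that fit in the free-credit budget."""
    return (free_hours * 60) // minutes_per_run


for minutes in (90, 120):  # typical convergence range for simple models
    print(minutes, full_runs_covered(minutes))  # prints "90 6", then "120 5"
```

Under those assumptions, the free credits cover roughly five to six full training runs.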

— Jeff;

Source: AWS News