Now Available – Elastic Fabric Adapter (EFA) for Tightly-Coupled HPC Workloads

We announced Elastic Fabric Adapter (EFA) at re:Invent 2018 and made it available in preview form at the time. During the preview, AWS customers put EFA through its paces on a variety of tightly-coupled HPC workloads, providing us with valuable feedback and helping us to fine-tune the final product.

Now Available

Today I am happy to announce that EFA is now ready for production use in multiple AWS regions. It is ready to support demanding HPC workloads that need lower and more consistent network latency, along with higher throughput, than is possible with traditional TCP communication. This launch lets you apply the scale, flexibility, and elasticity of the AWS Cloud to tightly-coupled HPC apps and I can’t wait to hear what you do with it. You can, for example, scale up to thousands of compute nodes without having to reserve the hardware or the network ahead of time.

All About EFA

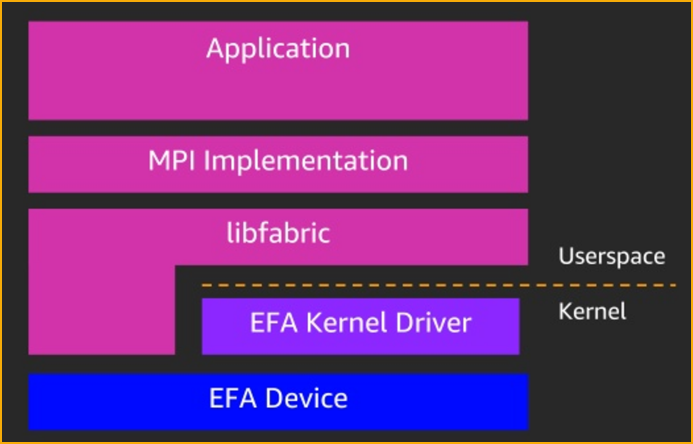

An Elastic Fabric Adapter is an AWS Elastic Network Adapter (ENA) with added capabilities (read my post, Elastic Network Adapter – High Performance Network Interface for Amazon EC2, to learn more about ENA). An EFA can still handle IP traffic, but also supports an important access model commonly called OS bypass. This model allows the application (most commonly through some user-space middleware) access the network interface without having to get the operating system involved with each message. Doing so reduces overhead and allows the application to run more efficiently. Here’s what this looks like (source):

The MPI Implementation and libfabric layers of this cake play crucial roles:

MPI – Short for Message Passing Interface, MPI is a long-established communication protocol that is designed to support parallel programming. It provides functions that allow processes running on a tightly-coupled set of computers to communicate in a language-independent way.

libfabric – This library fits in between several different types of network fabric providers (including EFA) and higher-level libraries such as MPI. EFA supports the standard RDM (reliable datagram) and DGRM (unreliable datagram) endpoint types; to learn more, check out the libfabric Programmer’s Manual. EFA also supports a new protocol that we call Scalable Reliable Datagram; this protocol was designed to work within the AWS network and is implemented as part of our Nitro chip.

Working together, these two layers (and others that can be slotted in instead of MPI), allow you to bring your existing HPC code to AWS and run it with little or no change.

You can use EFA today on c5n.18xlarge and p3dn.24xlarge instances in all AWS regions where those instances are available. The instances can use EFA to communicate within a VPC subnet, and the security group must have ingress and egress rules that allow all traffic within the security group to flow. Each instance can have a single EFA, which can be attached when an instance is started or while it is stopped.

You will also need the following software components:

EFA Kernel Module – The EFA Driver is in the Amazon GitHub repo; read Getting Started with EFA to learn how to create an EFA-enabled AMI for Amazon Linux, Amazon Linux 2, and other popular Linux distributions.

Libfabric Network Stack – You will need to use an AWS-custom version for now; again, the Getting Started document contains installation information. We are working to get our changes into the next release (1.8) of libfabric.

MPI or NCCL Implementation – You can use Open MPI 3.1.3 (or later) or NCCL (2.3.8 or later) plus the OFI driver for NCCL. We also also working on support for the Intel MPI library.

You can launch an instance and attach an EFA using the CLI, API, or the EC2 Console, with CloudFormation support coming in a couple of weeks. If you are using the CLI, you need to include the subnet ID and ask for an EFA, like this (be sure to include the appropriate security group):

After your instance has launched, run lspci | grep efa0 to verify that the EFA device is attached. You can (but don’t have to) launch your instances in a Cluster Placement Group in order to benefit from physical adjacency when every light-foot counts. When used in this way, EFA can provide one-way MPI latency of 15.5 microseconds.

You can also create a Launch Template and use it to launch EC2 instances (either directly or as part of an EC2 Auto Scaling Group) in On-Demand or Spot Form, launch Spot Fleets, and to run compute jobs on AWS Batch.

Learn More

To learn more about EFA, and to see some additional benchmarks, be sure to watch this re:Invent video (Scaling HPC Applications on EC2 w/ Elastic Fabric Adapter):

AWS Customer CFD Direct maintains the popular OpenFOAM platform for Computational Fluid Dynamics (CFD) and also produces CFD Direct From the Cloud (CFDDC), an AWS Marketplace offering that makes it easy for you to run OpenFOAM on AWS. They have been testing and benchmarking EFA and recently shared their measurements in a blog post titled OpenFOAM HPC with AWS EFA. In the post, they report on a pair of simulations:

External Aerodynamics Around a Car – This simulation scales extra-linearly to over 200 cores, gradually declining to linear scaling at 1000 cores (about 100K simulation cells per core).

Flow Over a Weir with Hydraulic Jump – This simulation (1000 cores and 100M cells) scales at between 67% and 72.6%, depending on a “data write” setting.

Read the full post to learn more and to see some graphs and visualizations.

In the Works

We plan to add EFA support to additional EC2 instance types over time. In general, we plan to provide EFA support for the two largest sizes of “n” instances of any given type, and also for bare metal instances.

— Jeff;

Source: AWS News